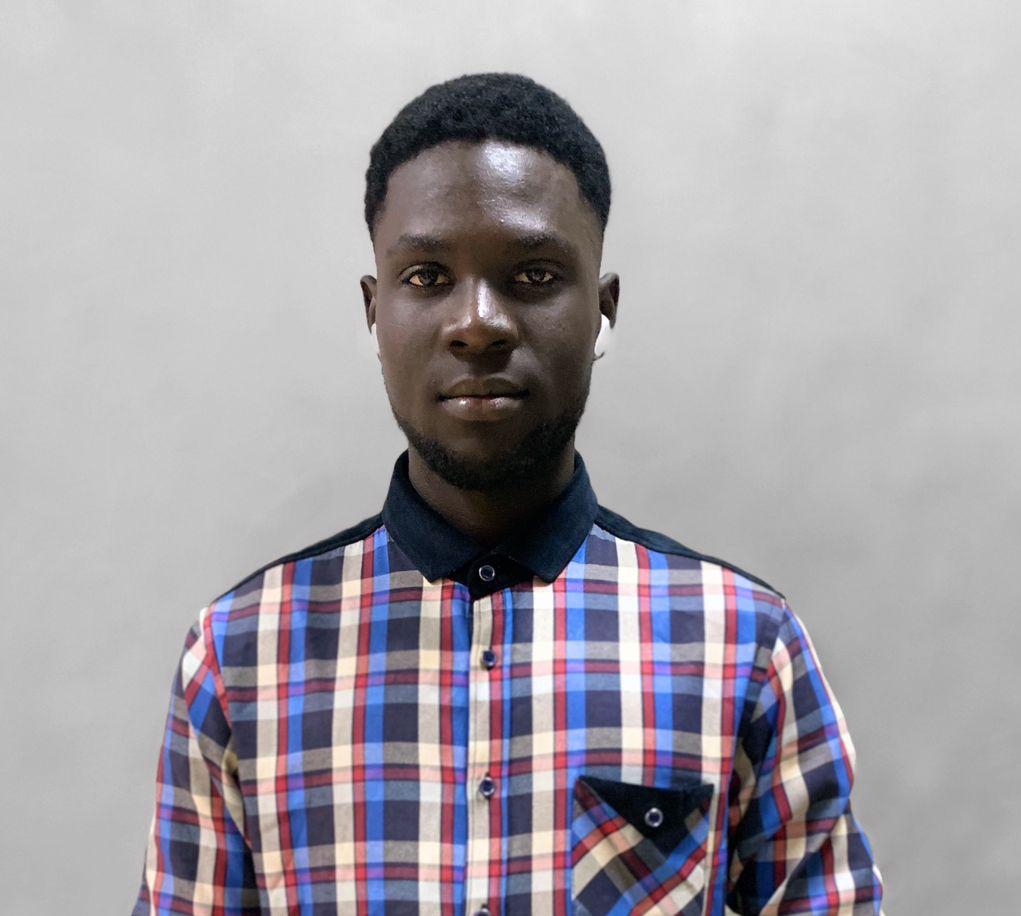

Hi, I'm Baimam Boukar 👋

I am a Master's student in Applied Machine Learning at CMU-Africa, building at the intersection of Software Engineering and AI Research. My current work focuses on Mechanistic Interpretability, AI for Space Systems, and Open Science. I am driven to understand foundation models from the inside out and to build the scalable infrastructure needed to verify their internal states and ensure reliable deployment in high-stakes scenarios.

Research Domains

Mechanistic Interpretability

Analyzing internal model states to verify safety and move beyond simple behavioral testing.

AI for Earth and Space

Applying deep learning to geospatial challenges, from satellite imagery to atmospheric modeling.

Scalable ML Systems

Building distributed infrastructure for large-scale training, evaluation, and model deployment.

Engineering Rigor

Implementing reproducibility, testing, and CI/CD best practices to build robust research codebases.

Selected Work

Recent Research

An Adaptive Latent Semantic Analysis Framework for Binary Text Classification

Binary text classification is a fundamental task in natural language processing, typically addressed using discriminative machine learning models or deep neural architectures. While Latent Semantic Analysis (LSA) has historically played an important role in semantic representation, its static, task-independent formulation now largely confines it to a preprocessing role. This paper proposes an Adaptive Latent Semantic Analysis (A-LSA) framework that redefines LSA as a discriminative, class-aware classification model. The proposed approach constructs class-conditional latent semantic spaces and introduces a semantic differential distance as a direct decision criterion. Experimental results on benchmark datasets demonstrate that A-LSA achieves performance competitive with standard classifiers while offering superior interpretability and significantly lower computational cost, with inference 40x faster than BERT.
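The abstract describes class-conditional latent semantic spaces with a differential distance used directly as the decision rule. The sketch below illustrates that idea under stated assumptions and is not the paper's implementation. Each class's latent space is assumed to be the top-k right singular vectors of its document-term matrix, computed with plain power iteration so the example needs only the standard library. A document's distance to a class is its residual after projection onto that space, and the hypothetical `differential_distance` is simply the difference of the two residuals. Raw term counts stand in for whatever weighting (e.g. TF-IDF) the paper uses, and the names `class_subspace`, `residual`, and `ALSASketch` are illustrative.

```python
import math
import random


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    n = math.sqrt(_dot(v, v))
    return [x / n for x in v] if n > 1e-12 else None


def class_subspace(docs, k=1, iters=100, seed=0):
    """Assumed latent space for one class: top-k right singular vectors of
    its document-term matrix X, via power iteration on X^T X."""
    dim = len(docs[0])
    rng = random.Random(seed)
    basis = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        for _ in range(iters):
            # w = X^T (X v)
            scores = [_dot(d, v) for d in docs]
            w = [sum(s * d[j] for s, d in zip(scores, docs)) for j in range(dim)]
            # Gram-Schmidt: keep w orthogonal to directions already found
            for b in basis:
                c = _dot(w, b)
                w = [wj - c * bj for wj, bj in zip(w, b)]
            v = _normalize(w)
            if v is None:  # class matrix has rank < k; stop early
                return basis
        basis.append(v)
    return basis


def residual(x, basis):
    """Distance between unit-normalized x and the span of `basis` (in [0, 1])."""
    u = _normalize(x)
    if u is None:
        return 1.0
    proj_sq = sum(_dot(u, b) ** 2 for b in basis)
    return math.sqrt(max(0.0, 1.0 - proj_sq))


class ALSASketch:
    """Hypothetical binary classifier with one latent space per class."""

    def __init__(self, k=1):
        self.k = k

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        if len(self.classes) != 2:
            raise ValueError("binary classification only")
        self.spaces = {
            c: class_subspace([d for d, y in zip(docs, labels) if y == c], self.k)
            for c in self.classes
        }
        return self

    def differential_distance(self, x):
        # positive: x is closer to the second class's latent space
        a, b = self.classes
        return residual(x, self.spaces[a]) - residual(x, self.spaces[b])

    def predict(self, x):
        a, b = self.classes
        return b if self.differential_distance(x) > 0 else a


# Raw term counts over six hypothetical vocabulary terms.
docs = [
    [2, 1, 1, 0, 0, 0], [1, 2, 1, 0, 0, 0], [1, 1, 2, 0, 0, 0],
    [0, 0, 0, 2, 1, 1], [0, 0, 0, 1, 2, 1], [0, 0, 0, 1, 1, 2],
]
labels = ["sports"] * 3 + ["finance"] * 3
clf = ALSASketch(k=1).fit(docs, labels)
print(clf.predict([1, 1, 0, 0, 0, 0]))  # sports
print(clf.predict([0, 0, 0, 0, 1, 1]))  # finance
```

Because the decision reduces to comparing two projection residuals, inference costs a handful of dot products per class, which is consistent in spirit with the low computational cost the abstract reports.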

Zero-Shot Neural Priors for Generalizable Cross-Subject and Cross-Task EEG Decoding

The development of generalizable electroencephalography (EEG) decoding models is essential for robust brain-computer interfaces (BCI) and objective neural biomarkers in mental health. Conventional approaches have been hindered by poor cross-subject and cross-task generalization, owing to high inter-subject variability and non-stationary neural signals. We address this challenge with a zero-shot cross-subject decoding framework on the large-scale Healthy Brain Network dataset, benchmarking a convolutional neural network baseline, a hybrid LSTM, and a Transformer-based foundation model. To adapt the Transformer for regression while averting catastrophic forgetting, we propose a novel progressive unfreezing strategy. The baseline yielded an nRMSE of 0.9991, whereas our fine-tuned Transformer achieved 0.9799 on unseen subjects. This work establishes scalable, calibration-free EEG decoding for computational psychiatry and behavioral prediction.
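Two ingredients of this abstract are easy to make concrete: the nRMSE metric and a progressive unfreezing schedule. The sketch below assumes nRMSE means RMSE divided by the population standard deviation of the targets (the abstract does not state the normalization), and it uses a generic top-down schedule similar to the gradual unfreezing popularized by ULMFiT, not the authors' exact strategy. The function names `nrmse` and `trainable_blocks` and the block names are hypothetical.

```python
import math


def nrmse(y_true, y_pred):
    """RMSE divided by the population standard deviation of the targets.
    Assumption: the abstract does not say which normalization is used."""
    n = len(y_true)
    mean = sum(y_true) / n
    rmse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)
    std = math.sqrt(sum((t - mean) ** 2 for t in y_true) / n)
    return rmse / std


def trainable_blocks(blocks, epoch, every=1):
    """Hypothetical top-down unfreezing schedule.

    `blocks` is ordered from input to output, with the regression head last.
    The head trains from epoch 0; one more block, counting back from the
    output, is unfrozen every `every` epochs. In PyTorch this list would
    drive `param.requires_grad` for each block's parameters.
    """
    n_unfrozen = min(len(blocks), 1 + epoch // every)
    return blocks[len(blocks) - n_unfrozen:]


y = [1.0, 2.0, 3.0, 4.0]
print(nrmse(y, [sum(y) / len(y)] * len(y)))  # 1.0 (mean predictor)
blocks = ["embed", "layer0", "layer1", "head"]
for epoch in range(4):
    print(epoch, trainable_blocks(blocks, epoch))
```

Under this assumed normalization, a constant predictor equal to the target mean scores exactly 1.0, which gives a reference point for reading the nRMSE values reported above. Unfreezing from the output side first lets the new regression head adapt before the pretrained lower layers are exposed to large gradients.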

Retrieval with Multiple Query Vectors through Anomalous Pattern Detection

A classical vector retrieval problem typically takes a single query embedding vector as input and retrieves the most similar embedding vectors from a vector database. However, complex reasoning and retrieval tasks frequently require multiple query vectors rather than a single one. In this work, we propose a retrieval method that considers multiple query vectors simultaneously and retrieves the most relevant vectors from the database using concepts from anomalous pattern detection. Specifically, our approach takes a set of query vectors Q (with |Q| ≥ 1) and identifies the subset of vector dimensions within Q that stand out (are anomalous) from the remaining dimensions. Next, we scan the vector database for vectors that are also anomalous across the identified dimensions and return them as the retrieved set. We validate our approach on two image datasets, a text dataset, and a tabular dataset.
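The two-step procedure in this abstract (find the anomalous dimensions of Q, then scan the database for vectors anomalous on those same dimensions) can be sketched as follows. The abstract does not specify the anomaly detector or the reference distribution, so this sketch swaps in a simple per-dimension z-score of the query set's mean against the database's own statistics, and it scores each database vector by its mean signed z-score over the selected dimensions. The functions `dim_stats`, `anomalous_dims`, and `retrieve` and the `thresh` parameter are hypothetical.

```python
import math


def dim_stats(db):
    """Per-dimension mean and population std of the database (std 0 -> 1)."""
    n, dim = len(db), len(db[0])
    mu = [sum(v[j] for v in db) / n for j in range(dim)]
    sd = [math.sqrt(sum((v[j] - mu[j]) ** 2 for v in db) / n) or 1.0 for j in range(dim)]
    return mu, sd


def anomalous_dims(queries, mu, sd, thresh=2.0):
    """Dimensions where the mean of the query set deviates from the database
    mean by at least `thresh` standard deviations; maps dim -> direction."""
    dims = {}
    for j in range(len(mu)):
        q_mean = sum(q[j] for q in queries) / len(queries)
        z = (q_mean - mu[j]) / sd[j]
        if abs(z) >= thresh:
            dims[j] = 1.0 if z > 0 else -1.0
    return dims


def retrieve(queries, db, thresh=2.0):
    """Indices of database vectors that are anomalous in the same direction
    on the same dimensions as the query set, strongest first."""
    mu, sd = dim_stats(db)
    dims = anomalous_dims(queries, mu, sd, thresh)
    if not dims:
        return []
    hits = []
    for i, v in enumerate(db):
        # mean signed z-score of v over the anomalous dimensions
        score = sum(s * (v[j] - mu[j]) / sd[j] for j, s in dims.items()) / len(dims)
        if score >= thresh:
            hits.append((score, i))
    return [i for _, i in sorted(hits, key=lambda h: (-h[0], h[1]))]


db = [
    [0, 1, 0], [0, 0, 1], [0, 1, 1], [0, 0, 0],
    [0, 1, 0], [0, 0, 1], [0, 1, 1], [0, 0, 0],
    [10, 1, 0], [10, 0, 1],
]
queries = [[10, 1, 1], [10, 0, 0]]  # |Q| = 2
print(retrieve(queries, db, thresh=1.5))  # [8, 9]
```

Here the two queries disagree on dimensions 1 and 2 but agree on an unusually large value in dimension 0, so only that dimension is selected and the scan returns the two database vectors that share it. A single-vector similarity search would instead be pulled toward whichever query it was given.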